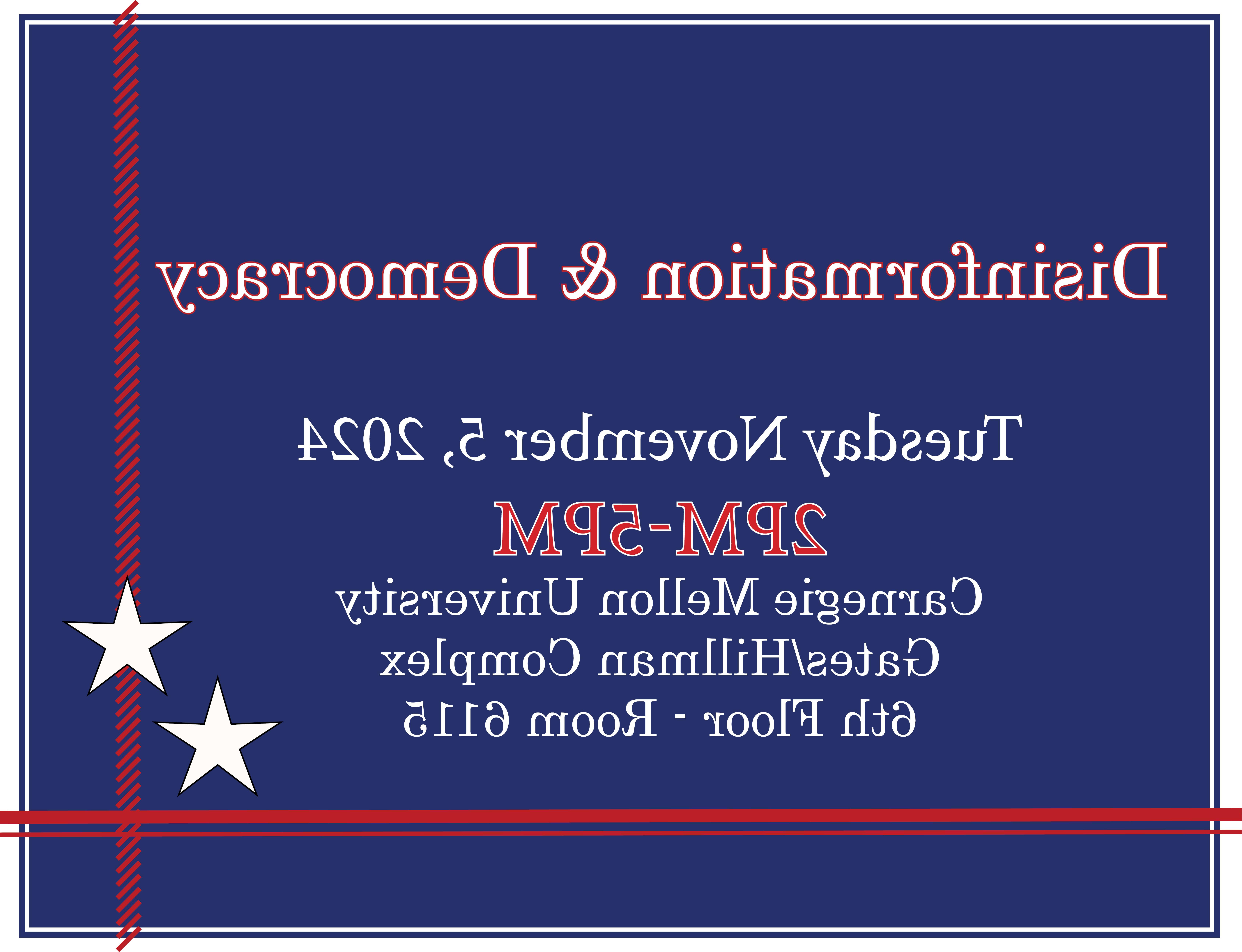

Join us for 'Disinformation & Democracy,' an informative demonstration and discussion about disinformation and its influence on our democracy and elections, organized by the IDeaS Center. The event will feature presentations from faculty and student researchers about their disinformation research at CMU. Attendees will be able to engage in Q&A with presenters and see demonstrations of software tools used to identify and counter online harms, including disinformation. Program information and the agenda are below. Demonstrations will take place before and after the panels for in-person participants to explore. Panels will be livestreamed, but Q&A will not be available to virtual attendees. Please use the link below to register for virtual participation. No registration is needed for in-person participants.

REGISTER for VIRTUAL PARTICIPATION

PROGRAM AGENDA

2pm-2:30pm, Tool and Technology Demonstrations

- AESOP (Demonstrated by Matthew Hicks)

We often conduct games or exercises for the information environment, such as a game that tests whether people can recognize that someone is trying to influence their opinions. Such games or exercises employ a scenario that specifies what people are talking about, what kind of messages are being sent, by whom, and so on. AESOP is a scenario generation tool that uses large language models to help the game or exercise designer develop a scenario; it generates all of the personas (actors and their characteristics), news stories, press releases, and guidelines for social media. In this demo you will see AESOP in action and be able to try it.

- ORA & BEND (Demonstrated by Rebecca Marigliano)

- NetMapper (Demonstrated by Dawn Robertson)

- Bot Tools (Demonstrated by Lynnette Ng)

- Sway (Presented by Simon Cullen)

Sway, a research project developed at Carnegie Mellon University by Simon Cullen and Nicholas DiBella, is an AI-facilitated group chat platform for student discussions. Sway aims to create online environments where students can discuss controversial issues more constructively, helping people have better discussions across deep political and moral divides. In an early pilot study, 120 participants spent 30 minutes on Sway discussing a polarizing topic such as the 2020 election, January 6, Israel-Palestine, or U.S. abortion policy. Find out more at http://www.swaybeta.ai.

2:30pm-3:30pm, Panel - Countering Disinformation

Panelists

Uttara’s research lies at the intersection of technology, business, and policy. Her research focuses on technology’s impact on society. Her work is largely interdisciplinary: she combines economic and experimental methods with AI to study digital platforms and consumer behavior. She leverages large-scale data and novel AI methods to identify patterns in consumer behavior. Her research informs managerial practice to build and sustain consumer trust in digital products while balancing the realities of revenue and user engagement. She is especially interested in the design of inclusive and socially responsible digital products and platform policies, and her research evaluates how they can transform consumer behavior, both online and offline.

Evan Williams

Evan Williams is a fourth-year PhD student at Carnegie Mellon University advised by Dr. Kathleen Carley. He formerly worked with the Department of State's Global Engagement Center on efforts to identify international state-backed disinformation campaigns. His research aims to better understand how users access misinformation on search engines. He also works on building models that can help search engines improve the reliability of returned results.

Chris Labash is an Associate Professor of Communication and Innovation in Carnegie Mellon's Heinz College. He is also Managing Director of ConsultingLab, a course, lab, and research project that works on consulting engagements with local and national clients such as the Carnegie Science Center, the City of Philadelphia, the City of Pittsburgh, FedEx, The Louisville Orchestra, the State of Pennsylvania, and many others. His current work, in addition to his teaching, is the development of a research project entitled "Designing for Truth," which looks at how applying principles of communication, influence, persuasion, advertising, and design might help audiences better trust accurate information as it is communicated, and marginalize or dispel misinformation and disinformation.

3:30pm-4:30pm, Panel - AI, Elections and Disinformation

Panelists

Hoda Heidari is the K&L Gates Career Development Assistant Professor in Ethics and Computational Technologies at Carnegie Mellon University with joint appointments in the Machine Learning Department and the Institute for Software, Systems, and Society. She is also affiliated with the Human-Computer Interaction Institute and the Heinz College of Information Systems and Public Policy at CMU, and co-leads the university-wide Responsible AI Initiative and the K&L Gates Initiative for Ethics and Computational Technologies. Her research is broadly concerned with the social, ethical, and economic implications of Artificial Intelligence, particularly issues of fairness and accountability through the use of Machine Learning in socially consequential domains. She is particularly interested in translating research contributions into positive impact on AI policy and practice.

Hong Shen is an Assistant Research Professor in the Human-Computer Interaction Institute at Carnegie Mellon University, where she directs the CARE (Collective AI Research and Evaluation) Lab. Prof. Shen is an interdisciplinary scholar situated at the intersection of human-computer interaction, communications, and public policy. Broadly, she studies the social, ethical, and policy implications of digital platforms and algorithmic systems, with a strong emphasis on bias, fairness, social justice, and power relations in Artificial Intelligence and Machine Learning.

Christine Lepird is a Societal Computing PhD student in Carnegie Mellon University’s Software and Societal Systems Department (S3D) in the School of Computer Science and a Knight Fellow at the IDeaS Center. Christine's primary area of research is social network analysis, a field of study that investigates social structures through ties of various strengths. While the field has been around for decades, Christine applies its principles to newer platforms, like Reddit and Facebook, to differentiate between organic communities and malicious networks that post misinformation. One of her main topics of study is pink slime, a type of inauthentic local news that has been spreading across the U.S. online news ecosystem.

Kathleen M. Carley is a professor in the School of Computer Science in the Software and Societal Systems Department at Carnegie Mellon University, with courtesy appointments in Engineering and Public Policy, the Heinz School, Electrical and Computer Engineering, and GSIA. She is the director of the Center for Computational Analysis of Social and Organizational Systems (CASOS), a university-wide interdisciplinary center that brings together network analysis, computer science, and organization science, and the director of the Center for Informed Democracy and Social Cybersecurity (IDeaS), a university-wide transdisciplinary center for disinformation, hate, and extremism online. Kathleen M. Carley's research combines cognitive science, network science, computer science, and social science to address complex organizational, social, and cultural problems. Her specific research areas include dynamic network analysis, computational social and organization theory, network adaptation and evolution, social cybersecurity, and online behavior during times of upheaval (e.g., disasters and elections). She and her students have developed infrastructure tools for analyzing large-scale dynamic networks and social media, as well as various multi-agent simulation systems.

4:30pm-5:00pm, Tool and Technology Demonstrations

- AESOP (Demonstrated by Matthew Hicks)

We often conduct games or exercises for the information environment, such as a game that tests whether people can recognize that someone is trying to influence their opinions. Such games or exercises employ a scenario that specifies what people are talking about, what kind of messages are being sent, by whom, and so on. AESOP is a scenario generation tool that uses large language models to help the game or exercise designer develop a scenario; it generates all of the personas (actors and their characteristics), news stories, press releases, and guidelines for social media. In this demo you will see AESOP in action and be able to try it.

- ORA & BEND (Demonstrated by Rebecca Marigliano)

ORA is a network analysis and visualization engine that can be used for any kind of data that can be represented as nodes and relations. One of the key features of ORA is that it enables rapid analysis of social media data such as X, Reddit, Telegram, and Facebook. BEND is a framework for assessing who is influencing whom, in what way, and to what effect. Within ORA you can assess messages to see whether the authors of those posts are trying to influence someone, in what way, and whether it is effective. In this demo you will be able to see ORA and the BEND framework in operation. A class on network analytics, including their use for social media analytics, will be taught by Dr. Carley in Spring 2025, and you can sign up for it: undergraduate and master's students should register for 17-685, and PhD students for 17-801.

- NetMapper (Demonstrated by Dawn Robertson)

- Bot Tools (Demonstrated by Lynnette Ng)

- Sway (Presented by Simon Cullen)

Sway, a research project developed at Carnegie Mellon University by Simon Cullen and Nicholas DiBella, is an AI-facilitated group chat platform for student discussions. Sway aims to create online environments where students can discuss controversial issues more constructively, helping people have better discussions across deep political and moral divides. In an early pilot study, 120 participants spent 30 minutes on Sway discussing a polarizing topic such as the 2020 election, January 6, Israel-Palestine, or U.S. abortion policy. Find out more at http://www.swaybeta.ai.